Scaling the Internet Infrastructure

Following on from my previous article about the oncoming tidal wave of online HTTP video and its impact on the Internet infrastructure, I reviewed a software solution that can help address this challenge.

One area that’s really important to understand is the direct correlation between video quality and content popularity. Take watching NFL football or a film: just as you get to a good point, your client starts buffering. As a consumer you have little choice but to vote with your feet. As a content provider, you need to ensure your infrastructure meets both current peak and future demand, because when a flash crowd occurs the customer will blame you rather than the CDN, even though the CDN is an essential part of the video delivery chain.

What are the requirements for a good video delivery solution?

With any online HTTP video delivery solution, there are three essential things required to minimise video buffering.

- First, it must be fast, running at network wire rate to make full use of the available server hardware.

- Second, it must scale linearly, so that performance increases in step with each additional unit.

- Lastly, it must be a software-only solution that can be deployed quickly in the cloud or on premises.

Introducing aiScaler

aiScaler is a commercial, high-performance HTTP caching proxy server. It achieves its performance by caching content in memory, using asynchronous polling technology within the Linux kernel, and it avoids disk except for logs and configuration. This makes it ideal for live video streaming formats such as Apple’s HTTP Live Streaming (HLS), MPEG-DASH, Microsoft Smooth Streaming and Adobe Flash. To test the marketing claims and validate performance, I prepared a set of live video benchmarks.
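To make the idea of a memory-based cache concrete, here is a minimal Python sketch of an in-memory object cache sitting in front of an origin. It is not aiScaler's implementation: the `MemoryCache` class, the `fetch_from_origin` callable and the TTL values are hypothetical. It assumes that live HLS playlists (`.m3u8`) are rewritten every few seconds and so need a short TTL, while media segments are immutable once published and can be held much longer.

```python
import time
from typing import Callable, Dict, Optional, Tuple

# Hypothetical TTLs in seconds: live playlists are rewritten every few
# seconds, while published media segments never change.
PLAYLIST_TTL = 2.0
SEGMENT_TTL = 300.0


class MemoryCache:
    """Minimal in-memory HTTP object cache keyed by URL path (illustrative only)."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch_from_origin
        self._clock = clock
        # path -> (expiry time, response body); nothing is written to disk
        self._store: Dict[str, Tuple[float, bytes]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def ttl_for(path: str) -> float:
        # Short TTL for playlists, long TTL for everything else (segments).
        return PLAYLIST_TTL if path.endswith(".m3u8") else SEGMENT_TTL

    def get(self, path: str) -> bytes:
        now = self._clock()
        entry: Optional[Tuple[float, bytes]] = self._store.get(path)
        if entry is not None and entry[0] > now:
            # Fresh copy in memory: serve it without touching the origin.
            self.hits += 1
            return entry[1]
        # Miss or expired entry: fetch once from the origin and keep it in memory.
        self.misses += 1
        body = self._fetch(path)
        self._store[path] = (now + self.ttl_for(path), body)
        return body
```

In this sketch, a thousand requests for the same segment within its TTL result in a single origin fetch, which is why a cache layer shields the origin during a flash crowd. A production cache would also bound memory use (for example with LRU eviction) and collapse concurrent misses for the same object into one origin request; both are left out for brevity.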

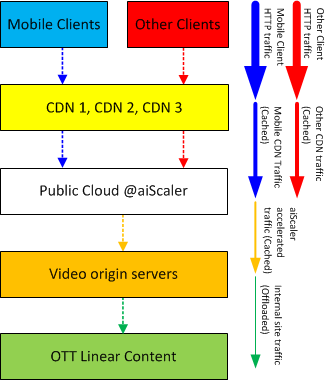

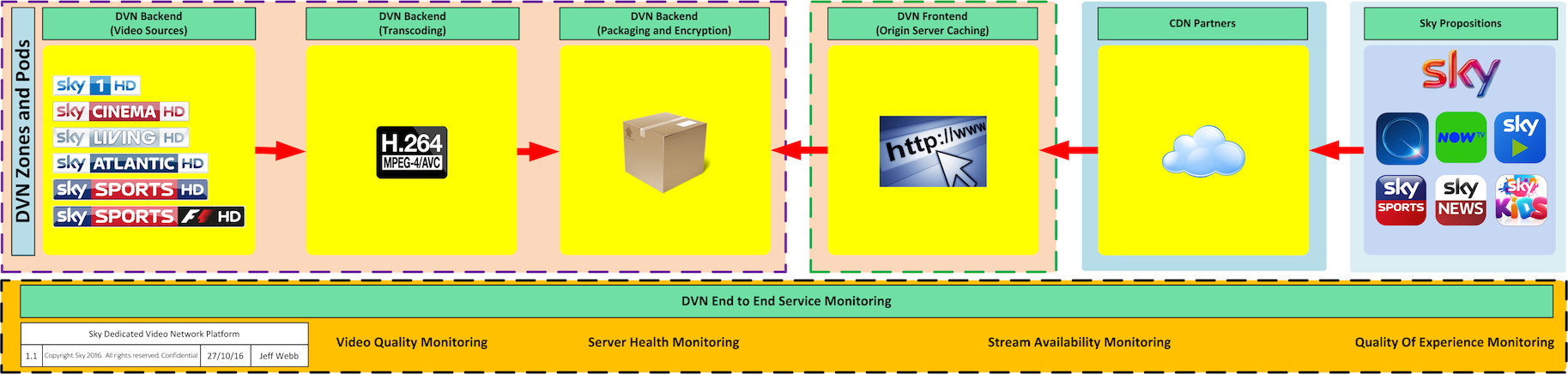

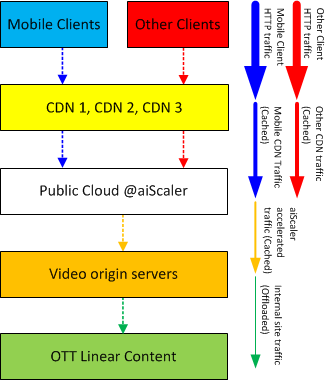

Recommended Architecture

My recommendation, assuming you’re already using a CDN to serve video content to customers, is to deploy aiScaler directly in front of your origin server platform. This provides the best possible performance and adds DDoS security protection. The high-level architecture diagram below provides an example of this:

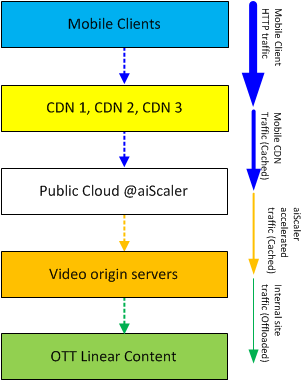

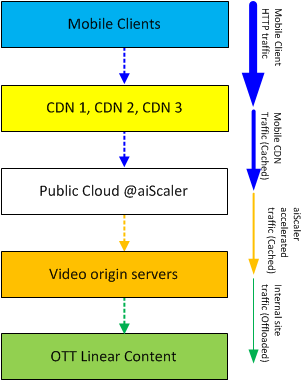

Test Architecture

The aim of the benchmark was maximum throughput without errors, since errors cause buffering, so I removed CDNs from the test scenario. The following high-level architecture diagram shows the test scenario for mobile HLS video:

aiScaler Test Results

The test scenario consisted of 2500 synthetic mobile HLS users based in North America and Europe. Note that I did not use a CDN; instead I tested aiScaler directly as the origin cache layer, hosted on the public cloud provider CenturyLink.

- Total CenturyLink bandwidth consumed in 30mins was 973GB.
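As a rough cross-check, the 973GB figure can be turned into an average throughput and a per-user bitrate. This Python sketch assumes decimal gigabytes (1GB = 10^9 bytes) and spreads the traffic evenly over the whole 30-minute run and all 2500 users, so the peak rate was necessarily at least this average.

```python
# Figures reported for the 30-minute test run.
TOTAL_BYTES = 973e9      # 973GB, assumed to be decimal gigabytes
DURATION_S = 30 * 60     # 30 minutes in seconds
USERS = 2500             # synthetic mobile HLS users


def average_gbps(total_bytes: float, duration_s: float) -> float:
    """Average throughput in gigabits per second (1 Gbit = 1e9 bits)."""
    return total_bytes * 8 / duration_s / 1e9


avg = average_gbps(TOTAL_BYTES, DURATION_S)
per_user_mbps = avg * 1000 / USERS

print(f"average throughput: {avg:.2f} Gbps")            # 4.32 Gbps
print(f"average per user:   {per_user_mbps:.2f} Mbps")  # 1.73 Mbps
```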

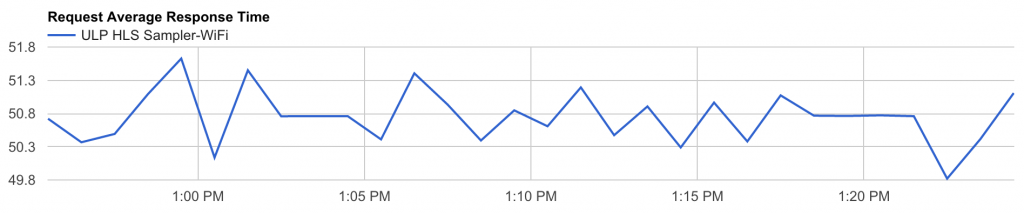

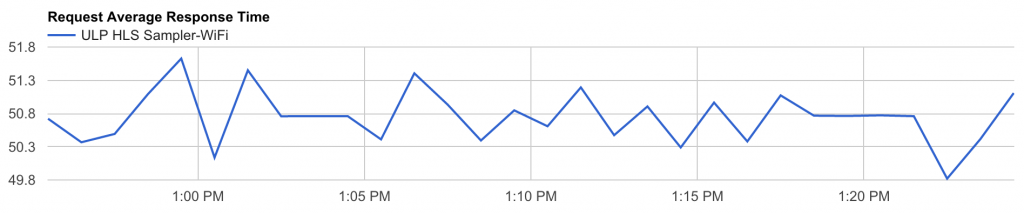

- aiScaler reduces client buffering, resulting in a smoother video experience for customers, as shown in the average response time graph.

- DNS time to live (TTL) was set to 1 minute for fast failure detection.

Average response time measures how long the live video takes to download, and it should remain relatively flat to avoid client buffering. The graph above shows that the average response time varied by around 1.5 seconds during the test. The test results suggest that aiScaler scales linearly, which would allow it to support multiple CDN partners concurrently for greater resilience.
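The flatness check described above can be sketched in Python. The working assumption, not aiScaler's method, is that a live HLS client only keeps up if each segment downloads faster than it plays back. The sketch therefore reports the mean, the spread between the lowest and highest samples (reading the ~1.5-second figure as this range rather than a statistical variance), and any samples at or above an assumed segment duration. `SEGMENT_DURATION_S` and `response_time_report` are hypothetical names.

```python
from statistics import mean
from typing import Sequence

# Assumed HLS segment duration in seconds (hypothetical; use the
# EXT-X-TARGETDURATION of your own streams).
SEGMENT_DURATION_S = 6.0


def response_time_report(samples_s: Sequence[float],
                         segment_duration_s: float = SEGMENT_DURATION_S) -> dict:
    """Summarise per-interval average segment download times.

    Any sample at or above the segment duration is flagged, because the
    client would be downloading no faster than it plays, risking buffering.
    """
    if not samples_s:
        raise ValueError("no samples")
    return {
        "mean_s": mean(samples_s),
        "spread_s": max(samples_s) - min(samples_s),  # how "flat" the graph is
        "at_risk": [t for t in samples_s if t >= segment_duration_s],
    }


# Illustrative input only, not the measured results.
report = response_time_report([1.2, 1.9, 2.7, 1.5])
print(f"mean {report['mean_s']:.2f}s, spread {report['spread_s']:.2f}s, "
      f"at risk {report['at_risk']}")
```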

Test Results Explained

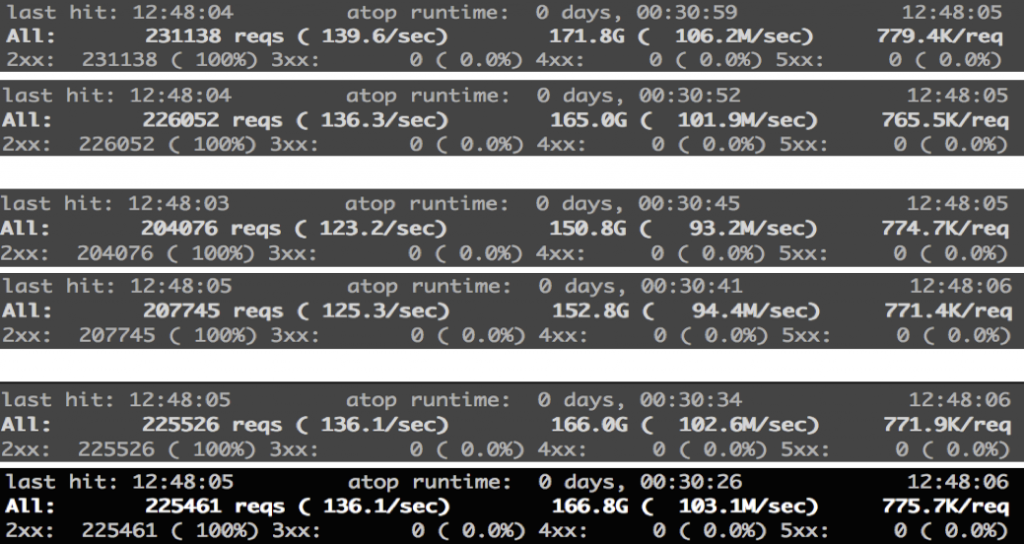

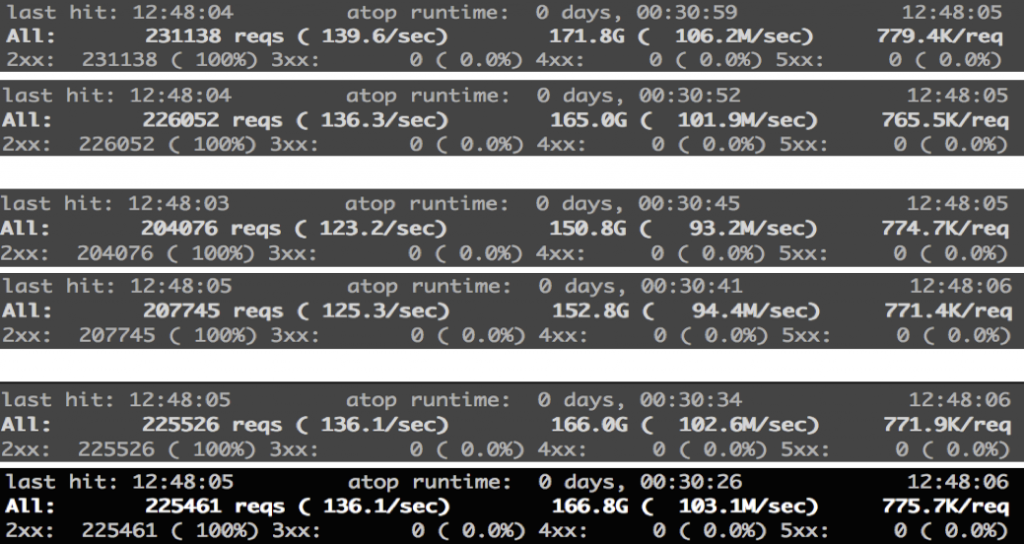

During testing I captured a lot of data from the clients and from aiScaler; the following results are the most interesting.

- All data was captured at the end of a 30 minute test run.

- Tests were run three times and results averaged.

- All responses were HTTP 200 OK, at between 120 and 140 requests per second.

- No HTTP 403/404 errors were observed.

- No HTTP 5xx errors were observed.

- Each aiScaler instance achieved the 1Gbps wire rate error-free, which shows that CenturyLink has a great cloud platform.

- CPU usage on the aiScaler instances (2 CPUs and 4GB memory each) reached 50%.

- Higher throughput was possible: the only bottleneck was the instance type, which limited throughput to 1Gbps.

- aiScaler has been independently tested in excess of 9Gbps on a single Intel Xeon based server.
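One way to produce figures like these from server-side logs is to count status classes and per-second request rates. The Python sketch below assumes access logs in NCSA Common Log Format; aiScaler's own log format may differ, and `summarise` is a hypothetical helper, not part of any aiScaler tooling.

```python
import re
from collections import Counter
from datetime import datetime
from typing import Iterable

# NCSA Common Log Format, e.g.
# 203.0.113.7 - - [10/Oct/2016:13:55:36 +0000] "GET /live/seg1.ts HTTP/1.1" 200 524288
CLF = re.compile(r'^\S+ \S+ \S+ \[([^\]]+)\] "[^"]*" (\d{3}) \S+')


def summarise(lines: Iterable[str]) -> dict:
    status_classes: Counter = Counter()  # "2xx" -> count, "4xx" -> count, ...
    per_second: Counter = Counter()      # timestamp (1 s resolution) -> requests
    for line in lines:
        m = CLF.match(line)
        if m is None:
            continue  # skip lines that are not in Common Log Format
        ts = datetime.strptime(m.group(1), "%d/%b/%Y:%H:%M:%S %z")
        status = m.group(2)
        status_classes[status[0] + "xx"] += 1
        per_second[ts] += 1
    return {
        "status_classes": dict(status_classes),
        # Only seconds that saw at least one request are counted.
        "min_rps": min(per_second.values(), default=0),
        "max_rps": max(per_second.values(), default=0),
    }
```

Seconds with no requests do not appear in the tally, so `min_rps` covers only active seconds; a real report would also trim the partial first and last seconds of the run.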

Test Conclusions

The results were encouraging, as they showed that multi-gigabit throughput could be achieved across multiple CenturyLink data centers with no errors. Delivering over-the-top (OTT) online video at scale is challenging without a combination of excellent caching software and a good cloud platform. Security is also a major factor in any online service, and I enabled aiScaler’s automatic DDoS protection during the testing period.

Summary

I was able to show that, when deployed on a public cloud provider, a single aiScaler instance can achieve wire-rate performance of 1Gbps. If you require more than 1Gbps, simply choose an instance type with more network bandwidth, or run multiple geographically dispersed instances for resilience.
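Because aiScaler is claimed to scale linearly, sizing a deployment becomes simple arithmetic. The Python sketch below assumes the 1Gbps per-instance ceiling seen in this test, a hypothetical 80% target utilisation to leave headroom for flash crowds, and a minimum of two instances for resilience; all three are parameters you should set from your own measurements.

```python
import math

# Per-instance ceiling observed in this test (limited by the instance type).
PER_INSTANCE_GBPS = 1.0


def instances_needed(peak_gbps: float,
                     per_instance_gbps: float = PER_INSTANCE_GBPS,
                     target_utilisation: float = 0.8,
                     min_instances: int = 2) -> int:
    """Instances required to serve peak_gbps, assuming linear scaling.

    target_utilisation keeps headroom for flash crowds, and min_instances
    keeps at least two instances (ideally in different locations) for
    resilience. Both defaults are hypothetical.
    """
    if peak_gbps < 0 or per_instance_gbps <= 0 or not 0 < target_utilisation <= 1:
        raise ValueError("invalid arguments")
    usable = per_instance_gbps * target_utilisation
    return max(min_instances, math.ceil(peak_gbps / usable))
```

For example, `instances_needed(4.5)` returns 6: each instance is planned at 0.8Gbps, and 4.5 / 0.8 = 5.625 rounds up to 6. A small demand such as `instances_needed(0.5)` still returns 2 because of the resilience floor.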

Deploying aiScaler was a straightforward process and took less than 15 minutes. In testing it has proven to be very capable of serving online video at very high throughput and I’d recommend you seriously evaluate it for your business needs.

Jeff

My first public speaking event as Principal Streaming Architect came at the Content Delivery World conference in November 2016, where I presented on “Innovations in Live Streaming to Multiple Platforms”.